Many marvel at the immense computing power available in modern-day wearable devices. However, few appreciate the many sensors that work together in these devices, continually collecting data. Picture a smartwatch or personal health monitor. It is the collaboration between different sensors that differentiates a step you take from general everyday movement. The sophistication behind interpreting the sensors’ output to provide useful information is truly astounding.

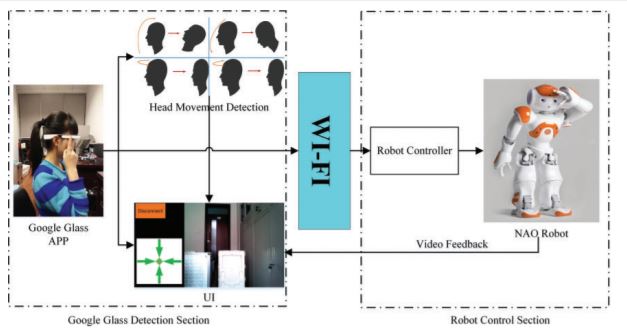

Google Glass, a smart pair of eyeglasses, contains many software-based and hardware-based sensors, including accelerometers, geomagnetic sensors, and gyroscopes. The data from the different sensors is combined in real time to interpret the wearer’s actions. This process of combining data collected from different sources is called data fusion. Wearable microprocessors provide the computing power for the complex computations in data fusion. New developments in multi-sensor fusion allow Google Glass wearers to control a humanoid robot using head gestures.

Source: Mao et al., Creative Commons License 3.0

Google Glass communicates with the humanoid robot over a standard WiFi connection. The humanoid robot used in this research is called NAO, pronounced “now”. The sensors’ initial data, or raw data, is noisy. Noisy data contains the true, real-world values mixed with extraneous information. Most of this noise comes from random electrical fluctuations or interference from nearby devices. (Think of when speakers hum near a lamp with a dimmer switch.) The raw data is too noisy to be used to determine the operator’s head gesture, so the researchers set a threshold to remove the noise, leaving only the relevant signal information. Data fusion was then performed on this filtered sensor data from the accelerometer, geomagnetic sensor, and gyroscope. The wearer’s head gesture can now be accurately identified, as shown to the right. Combining indicators of head motion from each sensor identifies the wearer’s head gesture more accurately than relying on a single sensor.
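The filter-then-fuse idea described above can be sketched in a few lines of Python. This is only an illustration, not the researchers' method: the article does not say how the threshold was chosen or how the three sensors were combined, so the threshold value, the sign conventions (positive pitch means head up, positive yaw means head right), the function names, and the majority-vote fusion rule are all assumptions.

```python
from collections import Counter

# Hypothetical noise threshold; the actual value used in the research is not given.
NOISE_THRESHOLD = 0.5


def remove_noise(samples, threshold=NOISE_THRESHOLD):
    """Zero out readings whose magnitude falls below the threshold."""
    return [s if abs(s) >= threshold else 0.0 for s in samples]


def sensor_vote(pitch_samples, yaw_samples):
    """Turn one sensor's filtered pitch/yaw readings into a gesture guess.

    Assumed convention: positive pitch = head up, positive yaw = head right.
    """
    pitch = sum(remove_noise(pitch_samples))
    yaw = sum(remove_noise(yaw_samples))
    if pitch == 0.0 and yaw == 0.0:
        return "none"  # nothing survived the filter
    if abs(pitch) >= abs(yaw):
        return "head_up" if pitch > 0 else "head_down"
    return "head_right" if yaw > 0 else "head_left"


def fuse(votes):
    """Assumed fusion rule: majority vote across sensors; ties give "none"."""
    counts = Counter(v for v in votes if v != "none")
    if not counts:
        return "none"
    ranked = counts.most_common(2)
    if len(ranked) == 2 and ranked[0][1] == ranked[1][1]:
        return "none"
    return ranked[0][0]


# Made-up readings for the three sensors named in the article.
accelerometer = sensor_vote([0.1, 0.9, 1.2, -0.2], [0.05, -0.1, 0.2, 0.0])
gyroscope = sensor_vote([0.8, 1.1], [0.3, 0.6])
geomagnetic = sensor_vote([0.2, 0.1], [-0.7, -0.9])

gesture = fuse([accelerometer, gyroscope, geomagnetic])
```

In this made-up example the geomagnetic reading alone would suggest a left turn, but the other two sensors outvote it, which is the sense in which fusing several sensors can be more reliable than trusting one.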

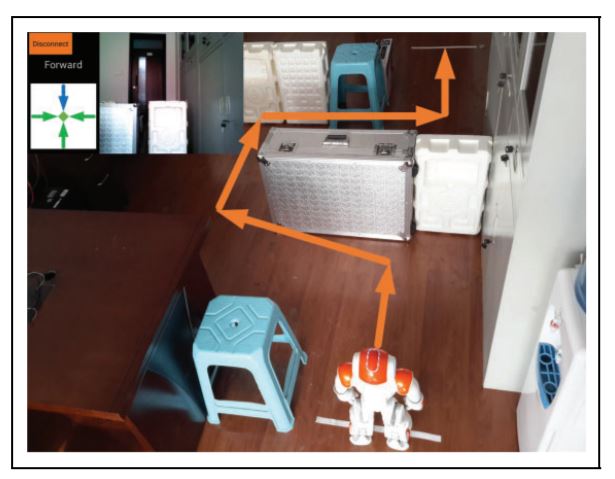

Four types of head gestures were used to direct the robot. Head up directed the robot forward, head down stopped the robot, a turn of the head to the left turned the robot left, and a turn of the head to the right turned the robot right. People were able to smoothly navigate the NAO robot through a simple maze, as shown in the picture below.
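The four gesture-to-command pairings listed above amount to a small lookup table. The sketch below shows one way to express it; the `Gesture` enum, the command strings, and `command_for` are hypothetical names chosen for illustration and are not part of the NAO robot's actual programming interface.

```python
from enum import Enum


class Gesture(Enum):
    HEAD_UP = "head_up"
    HEAD_DOWN = "head_down"
    HEAD_LEFT = "head_left"
    HEAD_RIGHT = "head_right"


# Pairings taken from the article; the command strings themselves are placeholders.
COMMANDS = {
    Gesture.HEAD_UP: "forward",
    Gesture.HEAD_DOWN: "stop",
    Gesture.HEAD_LEFT: "turn_left",
    Gesture.HEAD_RIGHT: "turn_right",
}


def command_for(gesture):
    """Return the robot command for a recognized gesture, or None if unmapped."""
    return COMMANDS.get(gesture)


# A short made-up sequence of gestures, as a wearer might produce in a maze.
route = [Gesture.HEAD_UP, Gesture.HEAD_LEFT, Gesture.HEAD_UP, Gesture.HEAD_DOWN]
commands = [command_for(g) for g in route]
```

Keeping the mapping in a table means that supporting a new gesture only requires adding one entry, rather than changing the control logic.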

Simple robot maze. Source: Mao et al., Creative Commons License 3.0

Hands-free control of robotic devices will soon be able to assist elderly or disabled patients with daily life. Someone could soon control their wheelchair with the tilt of their head.

Although the computing power available in today’s wearable electronics is impressive, wearables would not be possible without the data fusion of the sensors in these devices. Using head gestures, Google Glass users are able to control a humanoid robot in four ways: forward, stop, left, and right. Research and development in multi-sensor data fusion will allow for the identification of even more head gestures that can be paired with different robot controls. Future robot controls may include more movements, such as reversing and reaching the arms upward. Controlling robots with wearables will soon provide novel and valuable assistance to anyone who might need an extra set of hands.